Question 59/90

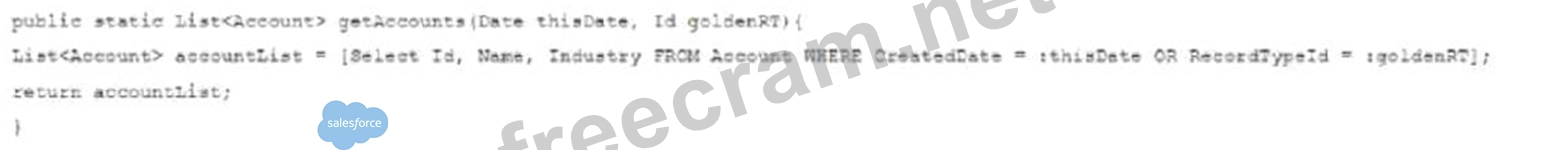

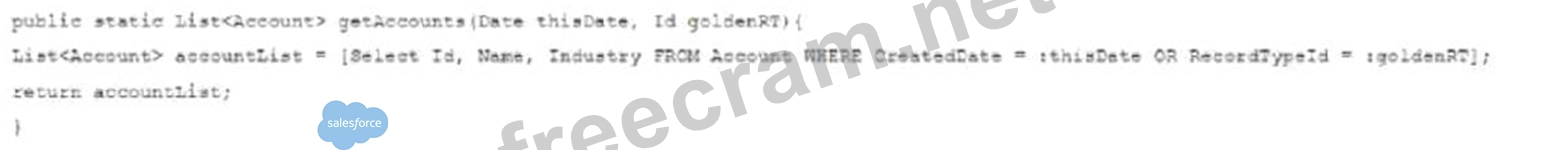

Consider the following code snippet:

The Apex method is executed in an environment with a large data volume count for Accounts, and the query is performing poorly.

Which technique should the developer implement to ensure the query performs optimally, while preserving the entire result set?

Correct Answer: D

When dealing with large data volumes in Salesforce and ensuring optimal performance for queries, it's important to choose an approach that both handles the data volume efficiently and retrieves the complete result set.

Option D is correct because a Database.QueryLocator, obtained by calling Database.getQueryLocator, is the recommended way to handle large data volumes. It is typically returned from the start method of a batch Apex class, which processes records in batches and so reduces the likelihood of hitting governor limits. A batch QueryLocator can return up to 50 million records, whereas the SOQL queries in a regular transaction can retrieve at most 50,000 rows in total.
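As a rough illustration of the pattern described above, here is a minimal batch Apex sketch. The class name `AccountBatchSketch`, the selected fields, and the unfiltered query are assumptions for illustration only; the actual query from the exhibit is not reproduced here.

```apex
// Hypothetical batch class: the name, query, and fields are illustrative only.
public class AccountBatchSketch implements Database.Batchable<SObject> {

    // start() returns a QueryLocator, so the platform streams the result set
    // to execute() in chunks instead of loading every row at once.
    public Database.QueryLocator start(Database.BatchableContext bc) {
        return Database.getQueryLocator('SELECT Id, Name FROM Account');
    }

    // execute() is called once per chunk; each call gets fresh governor limits.
    public void execute(Database.BatchableContext bc, List<SObject> scope) {
        for (Account acct : (List<Account>) scope) {
            // Per-record processing would go here.
        }
    }

    // finish() runs once after all chunks have been processed.
    public void finish(Database.BatchableContext bc) {
    }
}
```

The job would be started with something like `Database.executeBatch(new AccountBatchSketch(), 200);`, where 200 is the chunk size. Note that this changes how the records are processed (asynchronously, in chunks) rather than making the underlying filter itself more selective.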

Option A is incorrect because creating a formula field doesn't improve the performance of the query. It simply creates a new field that combines existing data, but it does not inherently optimize the query execution.

Option B is incorrect because breaking down the query into two parts and joining them in Apex could potentially be less efficient and would require additional code to manage the combined result sets. This approach does not leverage the built-in Salesforce features designed to handle large data volumes.
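For comparison, the two-query approach described in option B might look roughly like the sketch below. The filter fields `CreatedDate` and `RecordTypeId` are taken from the comments on this question; the class name, method name, and parameters are hypothetical, and whether each query is actually selective depends on the org's data and indexes.

```apex
// Hypothetical helper: names and parameters are illustrative only.
public class AccountQuerySketch {

    // Runs one query per filter instead of a single query with OR,
    // then merges the results by Id so no record appears twice.
    public static List<Account> getAccounts(Datetime cutoff, Id recordTypeId) {
        Map<Id, Account> merged = new Map<Id, Account>();

        // First half of the original OR condition.
        merged.putAll([SELECT Id, Name FROM Account WHERE CreatedDate >= :cutoff]);

        // Second half; records matching both filters overwrite the same key.
        merged.putAll([SELECT Id, Name FROM Account WHERE RecordTypeId = :recordTypeId]);

        return merged.values();
    }
}
```

Keying the map by record Id is what preserves the full result set of the original OR query without duplicates. Both queries still count toward the per-transaction row limit, so this sketch only helps when each filter on its own returns a manageable number of rows.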

Option C is incorrect because the @future annotation only makes the method execute asynchronously. The query itself runs unchanged, so this does not help with query performance or large data volume management.

References:

- Salesforce Documentation: Working with Very Large SOQL Queries
- Salesforce Documentation: Using Batch Apex

Recent Comments (The most recent comments are at the top.)

I think this is wrong, and the correct answer is B. Here is why:

In large datasets, queries with OR conditions often result in full table scans because they can prevent the database from using indexes efficiently.

By breaking the query into two individual queries (one filtering on CreatedDate and one on RecordTypeId), each query can leverage indexes more effectively, improving performance. It costs a few extra lines of code, but it is worth it.

B is correct. The 'OR' in a query makes the query non-selective, especially when querying a large number of records. Breaking the query into two parts yields two selective queries.