Question 44/51

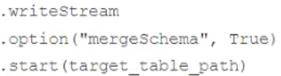

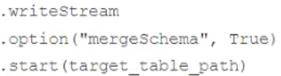

To facilitate near real-time workloads, a data engineer is creating a helper function to leverage the schema detection and evolution functionality of Databricks Auto Loader. The desired function will automatically detect the schema of the source directly, incrementally process JSON files as they arrive in a source directory, and automatically evolve the schema of the table when new fields are detected.

The function is displayed below with a blank:

Which response correctly fills in the blank to meet the specified requirements?
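
For orientation, the three requirements map onto Auto Loader options roughly as in the sketch below. This is not the function from the question, which is not reproduced in the text here; the function name `auto_load_json` and its parameters (`spark`, `source_path`, `checkpoint_path`, `table_name`) are hypothetical. It assumes a Databricks runtime where the `cloudFiles` source is available and a `SparkSession` is passed in.

```python
def auto_load_json(spark, source_path, checkpoint_path, table_name):
    """Hypothetical helper: stream JSON files into a table with Auto Loader.

    Assumes `spark` is an active SparkSession on a Databricks runtime.
    """
    stream = (
        spark.readStream
        # "cloudFiles" is the Auto Loader source: it discovers new files
        # incrementally as they land in the source directory.
        .format("cloudFiles")
        .option("cloudFiles.format", "json")
        # A schema location lets Auto Loader infer the schema and track
        # changes to it across runs.
        .option("cloudFiles.schemaLocation", checkpoint_path)
        .load(source_path)
    )
    return (
        stream.writeStream
        .option("checkpointLocation", checkpoint_path)
        # Allow the target table to pick up newly detected columns.
        .option("mergeSchema", "true")
        .toTable(table_name)
    )
```

A call might look like `auto_load_json(spark, "/mnt/raw/events", "/mnt/checkpoints/events", "bronze_events")`, where all three values are placeholders. No trigger is set in this sketch, so the stream would run continuously in micro-batches; a batch-style read with `spark.read` would not satisfy the incremental requirement.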
