Question 34/51

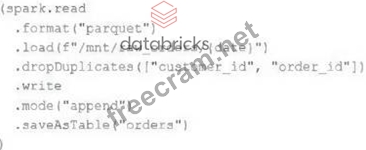

An upstream source writes Parquet data as hourly batches to directories named with the current date. A nightly batch job runs the following code to ingest all data from the previous day as indicated by the date variable:

Assume that the fields customer_id and order_id serve as a composite key to uniquely identify each order.

If the upstream system is known to occasionally produce duplicate entries for a single order hours apart, which statement is correct?
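To make the composite-key assumption concrete, here is a minimal sketch in plain Python. It is not the job's code (that appears only in the image above), and the sample rows, the `hour` field, and the helper name `dedupe_by_composite_key` are hypothetical; only `customer_id` and `order_id` come from the question.

```python
from typing import Dict, Iterable, List, Set, Tuple


def dedupe_by_composite_key(rows: Iterable[Dict]) -> List[Dict]:
    """Keep the first row seen for each (customer_id, order_id) pair."""
    seen: Set[Tuple] = set()
    unique: List[Dict] = []
    for row in rows:
        # Both fields together identify an order; neither is unique alone.
        key = (row["customer_id"], row["order_id"])
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


# Hypothetical sample data standing in for one day's hourly batches.
batch = [
    {"customer_id": 1, "order_id": 100, "hour": 2},
    {"customer_id": 1, "order_id": 100, "hour": 9},   # duplicate, hours later
    {"customer_id": 1, "order_id": 101, "hour": 9},
    {"customer_id": 2, "order_id": 100, "hour": 11},  # same order_id, other customer
]

unique_orders = dedupe_by_composite_key(batch)
```

A helper like this only considers the rows it is given; anything written by an earlier run is outside its view unless it is compared explicitly.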
