Question 50/183

You create a batch inference pipeline by using the Azure ML SDK. You run the pipeline by using the following code:

from azureml.pipeline.core import Pipeline

from azureml.core.experiment import Experiment

pipeline = Pipeline(workspace=ws, steps=[parallelrun_step])

pipeline_run = Experiment(ws, 'batch_pipeline').submit(pipeline)

You need to monitor the progress of the pipeline execution.

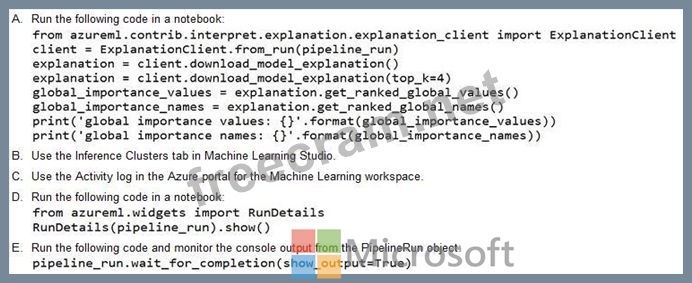

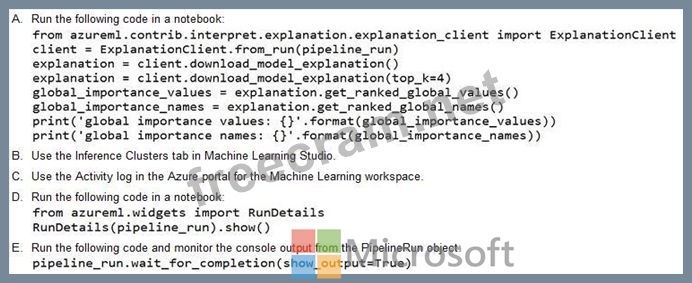

What are two possible ways to achieve this goal? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

Correct Answer: D,E

A batch inference job can take a long time to finish. This example monitors progress by using a Jupyter widget. You can also monitor the job's progress by using:

- Azure Machine Learning studio.

- Console output from the PipelineRun object.

from azureml.widgets import RunDetails

RunDetails(pipeline_run).show()

pipeline_run.wait_for_completion(show_output=True)
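Outside a notebook, where the widget is not available, the same idea can be approximated by polling the run's status in a loop. The following is only a minimal sketch, not one of the exam's answer options: `poll_until_done` and `FakeRun` are hypothetical names introduced here, and the set of terminal status strings is an assumption. The only SDK call it relies on is `get_status()`, which the SDK v1 run objects expose; `FakeRun` stands in for `pipeline_run` so the sketch runs without a workspace.

```python
import time

# Assumption: these strings are treated as terminal. SDK v1 pipeline runs
# commonly report "Finished" or "Failed"; other run types report "Completed".
TERMINAL_STATUSES = {"Completed", "Finished", "Failed", "Canceled"}


def poll_until_done(run, interval_seconds=30.0, max_polls=None, sleep=time.sleep):
    """Poll run.get_status() until a terminal status or max_polls is reached.

    Returns the list of distinct consecutive statuses that were observed.
    """
    history = []
    polls = 0
    while True:
        status = run.get_status()
        # Record and print only when the status changes.
        if not history or history[-1] != status:
            print(f"status: {status}")
            history.append(status)
        if status in TERMINAL_STATUSES:
            return history
        polls += 1
        if max_polls is not None and polls >= max_polls:
            return history
        sleep(interval_seconds)


class FakeRun:
    """Hypothetical stand-in for pipeline_run so the sketch runs offline."""

    def __init__(self, statuses):
        self._statuses = list(statuses)

    def get_status(self):
        # Return the next scripted status; repeat the last one when exhausted.
        if len(self._statuses) > 1:
            return self._statuses.pop(0)
        return self._statuses[0]


if __name__ == "__main__":
    demo = FakeRun(["NotStarted", "Running", "Running", "Finished"])
    poll_until_done(demo, interval_seconds=0)
```

With a real run, the call would be `poll_until_done(pipeline_run)`. In most cases `wait_for_completion(show_output=True)` is simpler, since it blocks and streams the logs for you; a manual loop is mainly useful when you want your own timeout or logging.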

Reference:

https://docs.microsoft.com/en-us/azure/machine-learning/how-to-use-parallel-run-step#monitor-the-parallel-run-job
