Question 108/124

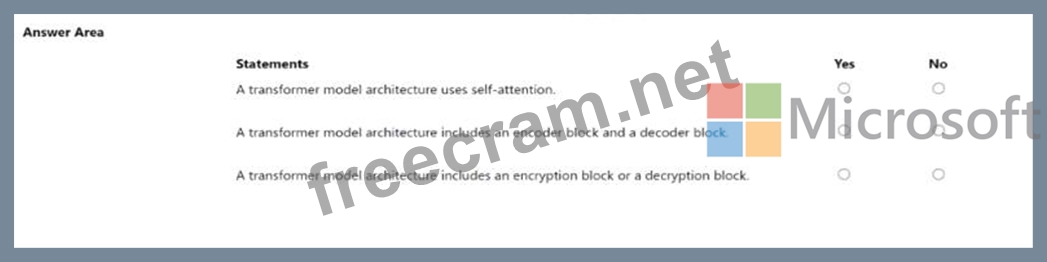

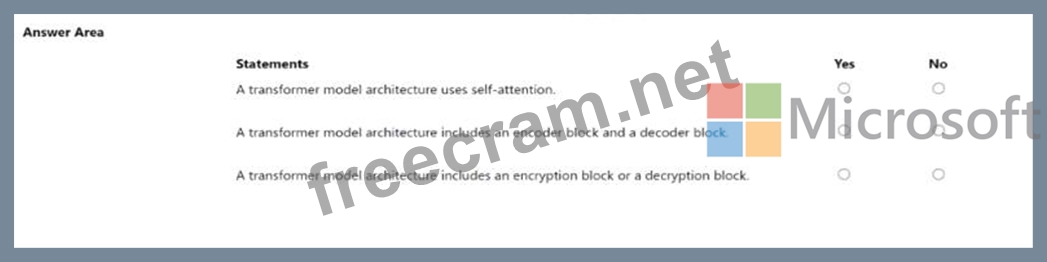

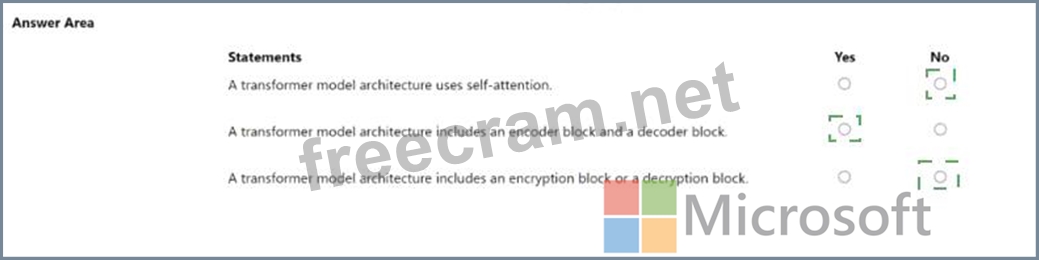

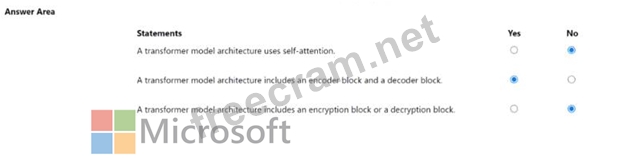

For each of the following statements, select Yes if the statement is true. Otherwise, select No. NOTE: Each correct selection is worth one point.

Correct Answer:

Explanation:

Recent Comments (The most recent comments are at the top.)

Gemini and DeepSeek both answer Yes, Yes, No.

Let's analyze each statement about transformer models:

Statement 1: A transformer model architecture uses self-attention.

Answer: Yes

Explanation: Self-attention is a fundamental and defining characteristic of transformer models. It allows the model to weigh the importance of different parts of the input sequence when processing it, capturing long-range dependencies and relationships within the data.
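To make the "weigh the importance of different parts of the input" idea concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It is only an illustration under simplifying assumptions: queries, keys, and values are all the raw token vectors (a real transformer applies learned projection matrices first), and the names `softmax`, `self_attention`, and `tokens` are made up for this example.

```python
import math

def softmax(xs):
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(X):
    """Scaled dot-product self-attention with Q = K = V = X.

    Assumption: no learned projections, single head. X is a list of
    token vectors that all have the same length d.
    """
    d = len(X[0])
    outputs, weights = [], []
    for q in X:
        # Score this token against every token in the sequence (itself included).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in X]
        w = softmax(scores)  # attention weights; they sum to 1
        weights.append(w)
        # Output is the attention-weighted average of all token vectors.
        outputs.append([sum(w_j * v[i] for w_j, v in zip(w, X)) for i in range(d)])
    return outputs, weights

# Three toy 2-dimensional "token" vectors (hypothetical data).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens)
```

Each row of `weights` shows how much one token attends to every other token, regardless of how far apart they are in the sequence, which is why self-attention can capture long-range dependencies.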

Statement 2: A transformer model architecture includes an encoder block and a decoder block.

Answer: Yes

Explanation: The original transformer architecture includes both an encoder and a decoder. The encoder processes the input sequence, and the decoder generates the output sequence. Both blocks are stacks of multiple layers, each containing self-attention mechanisms and other sub-layers. (Some later variants keep only one of the two, such as the encoder-only BERT or the decoder-only GPT models.)

Statement 3: A transformer model architecture includes an encryption block or a decryption block.

Answer: No

Explanation: While transformers are used in some cryptographic applications, encryption and decryption are not inherent components of the standard transformer architecture. Transformers are primarily designed for sequence-to-sequence tasks like natural language processing, not for cryptographic operations. The "encoder" and "decoder" terminology in transformers is related to how they process input and generate output sequences, not to encryption/decryption....