- Home

- Oracle

- Oracle Big Data 2017 Implementation Essentials

- Oracle.1z0-449.v2018-04-15.q72

- Question 72

Valid 1z0-449 Dumps shared by ExamDiscuss.com for Helping Passing 1z0-449 Exam! ExamDiscuss.com now offer the newest 1z0-449 exam dumps, the ExamDiscuss.com 1z0-449 exam questions have been updated and answers have been corrected get the newest ExamDiscuss.com 1z0-449 dumps with Test Engine here:

Access 1z0-449 Dumps Premium Version

(72 Q&As Dumps, 35%OFF Special Discount Code: freecram)

<< Prev Question

Question 72/72

Your customer has 10 web servers that generate logs at any given time. The customer would like to consolidate and load this data as it is generated into HDFS on the Big Data Appliance.

Which option should the customer use?

Which option should the customer use?

Correct Answer: C

Explanation/Reference:

Apache Flume is a distributed, reliable, and available system for efficiently collecting, aggregating and moving large amounts of log data from many different sources to a centralized data store.

The use of Apache Flume is not only restricted to log data aggregation. Since data sources are customizable, Flume can be used to transport massive quantities of event data including but not limited to network traffic data, social-media-generated data, email messages and pretty much any data source possible.

Example:

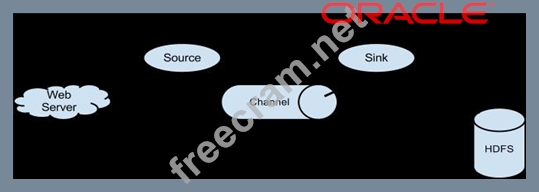

A Flume source consumes events delivered to it by an external source like a web server. The external source sends events to Flume in a format that is recognized by the target Flume source.

When a Flume source receives an event, it stores it into one or more channels. The channel is a passive store that keeps the event until it's consumed by a Flume sink. The file channel is one example - it is backed by the local filesystem. The sink removes the event from the channel and puts it into an external repository like HDFS (via Flume HDFS sink) or forwards it to the Flume source of the next Flume agent (next hop) in the flow. The source and sink within the given agent run asynchronously with the events staged in the channel.

Incorrect Answers:

A: ZooKeeper is a centralized service for maintaining configuration information, naming, providing distributed synchronization, and providing group services. All of these kinds of services are used in some form or another by distributed applications. Each time they are implemented there is a lot of work that goes into fixing the bugs and race conditions that are inevitable. Because of the difficulty of implementing these kinds of services, applications initially usually skimp on them, which make them brittle in the presence of change and difficult to manage.

References: https://flume.apache.org/FlumeUserGuide.html

Apache Flume is a distributed, reliable, and available system for efficiently collecting, aggregating and moving large amounts of log data from many different sources to a centralized data store.

The use of Apache Flume is not only restricted to log data aggregation. Since data sources are customizable, Flume can be used to transport massive quantities of event data including but not limited to network traffic data, social-media-generated data, email messages and pretty much any data source possible.

Example:

A Flume source consumes events delivered to it by an external source like a web server. The external source sends events to Flume in a format that is recognized by the target Flume source.

When a Flume source receives an event, it stores it into one or more channels. The channel is a passive store that keeps the event until it's consumed by a Flume sink. The file channel is one example - it is backed by the local filesystem. The sink removes the event from the channel and puts it into an external repository like HDFS (via Flume HDFS sink) or forwards it to the Flume source of the next Flume agent (next hop) in the flow. The source and sink within the given agent run asynchronously with the events staged in the channel.

Incorrect Answers:

A: ZooKeeper is a centralized service for maintaining configuration information, naming, providing distributed synchronization, and providing group services. All of these kinds of services are used in some form or another by distributed applications. Each time they are implemented there is a lot of work that goes into fixing the bugs and race conditions that are inevitable. Because of the difficulty of implementing these kinds of services, applications initially usually skimp on them, which make them brittle in the presence of change and difficult to manage.

References: https://flume.apache.org/FlumeUserGuide.html

- Question List (72q)

- Question 1: Your customer needs to manage configuration information on t...

- Question 2: Identify two ways to create an external table to access Hive...

- Question 3: Your customer has an older starter rack Big Data Appliance (...

- Question 4: Which command should you use to view the contents of the HDF...

- Question 5: What two actions do the following commands perform in the Or...

- Question 6: You need to place the results of a PigLatin script into an H...

- Question 7: Your customer needs to move data from Hive to the Oracle dat...

- Question 8: How does increasing the number of storage nodes and shards i...

- Question 9: What access driver does the Oracle SQL Connector for HDFS us...

- Question 10: The NoSQL KVStore experiences a node failure. One of the rep...

- Question 11: Your customer uses LDAP for centralized user/group managemen...

- Question 12: Your customer collects diagnostic data from its storage syst...

- Question 13: What is the result when a flume event occurs for the followi...

- Question 14: Your customer has a requirement to use Oracle Database to ac...

- Question 15: How should you control the Sqoop parallel imports if the dat...

- Question 16: Your customer's Oracle NoSQL store has a replication factor ...

- Question 17: Your customer uses Active Directory to manage user accounts....

- Question 18: Your customer needs to analyze large numbers of log files af...

- Question 19: Your customer has purchased Big Data SQL and wants to set it...

- Question 20: You recently set up a customer's Big Data Appliance. At the ...

- Question 21: The hdfs_streamscript is used by the Oracle SQL Connector fo...

- Question 22: The log data for your customer's Apache web server has seven...

- Question 23: Your customer needs to access Hive tables, HDFS, and Data Pu...

- Question 24: The Hadoop NameNode is running on port #3001, the DataNode o...

- Question 25: Your customer wants to implement the Oracle Table Access for...

- Question 26: You want to set up access control lists on your NameNode in ...

- Question 27: What are two of the main steps for setting up Oracle XQuery ...

- Question 28: How should you encrypt the Hadoop data that sits on disk?...

- Question 29: Your customer is worried that the redundancy of HDFS will no...

- Question 30: During a meeting with your customer's IT security team, you ...

- Question 31: Your customer's security team needs to understand how the Or...

- Question 32: Your customer's Hadoop cluster displays an error while runni...

- Question 33: What does the flume sink do in a flume configuration? (Exhib...

- Question 34: Your customer is spending a lot of money on archiving data t...

- Question 35: You need to create an architecture for your customer's Oracl...

- Question 36: Your customer is setting up an external table to provide rea...

- Question 37: Your customer completed all the Kerberos installation prereq...

- Question 38: Which statement is true about the NameNode in Hadoop?...

- Question 39: Your customer wants to architect a system that helps to make...

- Question 40: You are working with a client who does not allow the storage...

- Question 41: What is the output of the following six commands when they a...

- Question 42: Identify two features of the Hadoop Distributed File System ...

- Question 43: What two things does the Big Data SQL push down to the stora...

- Question 44: For Oracle R Advanced Analytics for Hadoop to access the dat...

- Question 45: How is Oracle Loader for Hadoop (OLH) better than Apache Sqo...

- Question 46: How does the Sqoop Server handle a job that was discontinued...

- Question 47: What are the two roles performed by the Big Data Appliance a...

- Question 48: Your customer keeps getting an error when writing a key/valu...

- Question 49: What are two reasons that a MapReduce job is not run when th...

- Question 50: Your customer has three XML files in HDFS with the following...

- Question 51: Your customer is experiencing significant degradation in the...

- Question 52: What happens if an active NameNode fails in the Oracle Big D...

- Question 53: Which user in the following set of ACL entries has read, wri...

- Question 54: You are helping your customer troubleshoot the use of the Or...

- Question 55: Your customer has a Big Data Appliance and an Exadata Databa...

- Question 56: What kind of workload is MapReduce designed to handle?...

- Question 57: Your customer receives data in JSON format. Which option sho...

- Question 58: (Exhibit) What are three correct results of executing the pr...

- Question 59: Your customer wants you to set up ODI by using the IKM SQL t...

- Question 60: How does the Oracle SQL Connector for HDFS access HDFS data ...

- Question 61: Your customer's IT staff is made up mostly of SQL developers...

- Question 62: Which three pieces of hardware are present on each node of t...

- Question 63: Your customer has had a security breach on its running Big D...

- Question 64: Your customer needs the data that is generated from social m...

- Question 65: You are attempting to start KVLite but get an error about th...

- Question 66: Identify two valid steps for setting up a Hive-to-Hive trans...

- Question 67: Your customer is using the IKM SQL to HDFS File (Sqoop) modu...

- Question 68: What does the following line do in Apache Pig? products = LO...

- Question 69: What are the two advantages of using Hive over MapReduce? (C...

- Question 70: What is the main purpose of the Oracle Loader for Hadoop (OL...

- Question 71: Which parameter setting will force Impala to execute queries...

- Question 72: Your customer has 10 web servers that generate logs at any g...